71 Google-Ordained SEO Guidelines You May Have Overlooked

by Ajit Singh Kular

Google hasn’t been very open about its algorithmic changes lately. Apart from major updates such as the Mobile-First Index (which is yet to be launched), Panda and some other unconfirmed alterations, Google has made very few announcements for SEOs/webmasters. However, evangelists like John Mueller (Twitter: @JohnMu) and Gary Illyes (Twitter: @methode) have been instrumental in bridging this gap, and Barry Schwartz (Twitter: @rustybrick) relentlessly publishes updates, notifications, webmasters’ questions and the myriad chatter across the SEO community. Many of us owe big thanks to these mavens, who have been selflessly sharing their knowledge with the whole fraternity.

We at CueBlocks have made a similar effort! We have compiled a list of some important Google algorithm updates and official statements, straight from the horse’s mouth. Many of these points clarify the direction that Google wants SEOs to take. These will also serve as a primer for correct and ethical practices in our sphere.

So, let’s get rollin’…

- Do not block CSS and JS files in your website’s robots.txt file

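To spot-check this guideline, here is a rough sketch using Python’s standard-library `urllib.robotparser`. The robots.txt rules, asset URLs and the `blocked_assets()` helper are hypothetical. Note that this parser only does simple prefix matching: it does not implement Google’s `*`/`$` wildcards or longest-match precedence, so treat it as a quick sanity check rather than a substitute for Search Console.

```python
from urllib.robotparser import RobotFileParser


def blocked_assets(robots_txt: str, asset_urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the asset URLs that these robots.txt rules would stop `agent` from fetching."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules from a string instead of fetching the file
    return [url for url in asset_urls if not parser.can_fetch(agent, url)]


# Hypothetical robots.txt that accidentally blocks the site's JavaScript directory
robots = """\
User-agent: *
Disallow: /assets/js/
Disallow: /checkout/
"""
assets = [
    "https://www.example.com/assets/css/site.css",
    "https://www.example.com/assets/js/app.js",
]
print(blocked_assets(robots, assets))  # prints ['https://www.example.com/assets/js/app.js']
```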
Although Google hasn’t yet mastered crawling JavaScript, it advises that Googlebot should be able to access the CSS and JS files on your site. You can verify this with the Fetch and Render feature in Search Console.

- Google ignores special characters in the meta title tag of the website

Many brands are unsure whether to include trademark symbols such as ™ and © in their title tags. With this statement, Google has put the speculation to rest: businesses can do well without a trademark symbol in their meta titles.

- W3C code validation is not really a deciding factor for SERPs

As per Google, the W3C validation of a page does not really influence website rankings. Still, we recommend adhering to the fundamentals.

- Google has confirmed that one can use multiple H1 tags on a page

Google has recently removed its instructions on the usage of H1 tags. This gives us an opportunity to target more keywords/phrases, as long as it is done ethically.

- Don’t use URL fragments

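A quick way to see why fragments are a poor fit for separate content: the part after `#` is never sent to the server, so every fragment variant collapses into a single crawlable URL. The sketch below, with hypothetical URLs and a hypothetical `crawlable_url()` helper, shows that collapse using Python’s `urllib.parse.urldefrag`; it assumes, as is generally the case, that Google treats the defragmented URL as the page’s address.

```python
from urllib.parse import urldefrag


def crawlable_url(url: str) -> str:
    """Strip the #fragment, which browsers never send to the server."""
    return urldefrag(url).url


urls = [
    "https://www.example.com/shoes#red",
    "https://www.example.com/shoes#blue",
    "https://www.example.com/shoes?color=red",
]
# The two fragment variants collapse into one URL; the query-string variant stays distinct
print(sorted({crawlable_url(u) for u in urls}))
# prints ['https://www.example.com/shoes', 'https://www.example.com/shoes?color=red']
```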
John Mueller from Google has recommended avoiding URL fragments (the part of a URL after #) for serving content in general.

- The Panda update also assesses website architecture

Google says that the Panda update also gauges a website’s quality on the basis of its structure, which underlines the importance of a well-planned site architecture. So give your entire website a rock-solid architectural foundation.

- As of now, AMP is not a ranking factor, but it improves overall website speed

Google affirms that AMP is a great way to make your site more user-friendly on mobile, but it is not a ranking factor. However, if your AMP pages are set as the canonical version of your site, Google will consider their quality and assess your website accordingly.

- Use rel="nofollow" when linking to bad websites

As per Google, if you have to link to bad sites to corroborate your point or to use them as an example, you should use the rel="nofollow" attribute.

- It is not advisable to build backlinks from Google Docs/Sheets

John Mueller advises against building backlinks from Google Sheets or Docs. It’s better to invest in quality content that helps your customers instead.

- 404 (Not Found) URLs are not always due to a webmaster’s mistake; Googlebot can sometimes find unwanted URLs

Google acknowledges that 404 errors are not always the webmaster’s fault. Googlebot may sometimes wander into unwanted sections of a site.

- Mobile-First Index is still being tested and is not live yet

Google’s Mobile-First Index is still in its experimental stage and is not fully live yet.

- There is no single method/formula for gaining top search engine positions

Top Google positions result from many diverse factors that depend on the particular website and are further subject to Google’s algorithms. This means there’s no single formula a webmaster can rely on to achieve better rankings.

- The nofollow attribute may help manage the crawl budget of large websites

Google has assured us that you need not worry about crawl budget unless your site has an extremely large number of pages. Even so, on a large site it can be smart to use the nofollow attribute to help manage your crawl budget.

- Google takes manual action against rich snippet spam

Google has stated that if it finds you violating its rich snippets guidelines, your page could be subject to a manual action from its team, which is not a nice thing.

- A website penalty can be seen only by the webmaster, not by third-party tools/software

According to Google, only the webmaster, or anyone with access to the website’s Search Console account, can see whether the site has been penalized. Third-party tools that claim to detect penalties may not be trustworthy.

- There is no such thing as the Google Sandbox

The existence of the Google Sandbox has been debated since 2004. Google has repeatedly stated that there is no such thing or feature.

- Submit the URLs you want indexed in your sitemaps

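As a sketch of the sitemap guidance, the hypothetical `Page` records and `build_sitemap()` helper below write a sitemaps.org-format file that lists only pages that are indexable and self-canonical (their rel=canonical points at themselves). How you obtain the canonical and noindex data for each page is left as an assumption.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class Page:
    url: str
    canonical: str         # where the page's rel=canonical points
    noindex: bool = False  # True if the page carries a noindex directive


def build_sitemap(pages: list[Page]) -> str:
    """Return sitemap XML listing only indexable, self-canonical pages."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if page.noindex or page.url != page.canonical:
            continue  # leave out non-canonical and unwanted URLs
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = page.url
    return ET.tostring(urlset, encoding="unicode")


pages = [
    Page("https://www.example.com/shoes", "https://www.example.com/shoes"),
    Page("https://www.example.com/shoes?sort=price", "https://www.example.com/shoes"),
    Page("https://www.example.com/thank-you", "https://www.example.com/thank-you", noindex=True),
]
print(build_sitemap(pages))
```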
Add the URLs you want Google to index to your sitemap, so that they can attract as much traffic as possible. Google recommends not submitting non-canonical or unwanted URLs in a sitemap, so that search engines are not presented with confusing information.

- A link will be considered paid if it was obtained in exchange for a charity donation

As per Google, a backlink earned in exchange for a donation to charity will be considered a paid link. Google’s latest algorithms are smart enough to tell authentic links from paid ones and may frown upon you for engaging in such activities.

- Changing URL structures and switching to HTTPS URLs are completely different

Google says that moving from HTTP to HTTPS is distinct from changing the URL structure (e.g. going from numeric URLs to keyword-based URLs), and advises against making both changes at the same time.

- Google allows different markup formats for structured data

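To illustrate, here is product information emitted as JSON-LD, the format that is simplest to generate from code; equivalent microdata or RDFa would carry the same schema.org properties. The product values and the `product_jsonld()` helper are hypothetical, and only a minimal subset of `Product`/`Offer` properties is shown.

```python
import json


def product_jsonld(name: str, price: str, currency: str) -> str:
    """Wrap minimal schema.org Product data in a JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {"@type": "Offer", "price": price, "priceCurrency": currency},
    }
    # Escape "</" so a value containing "</script>" cannot close the tag early
    payload = json.dumps(data).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'


print(product_jsonld("Trail Running Shoe", "59.99", "USD"))
```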
As per Google, different structured data formats (microdata, RDFa and JSON-LD) can be used to mark up content. You are free to choose any format for your schema markup, or even mix them, as long as the resulting output remains the same.

- Google ignores the HTML lang attribute completely

According to Google, there is no benefit to adding the lang attribute; Google has confirmed that it ignores the attribute completely. However, there is no harm in keeping it if you have already added it.

- Content within tabs will be given full SEO weight

Many sites have content in tabs or collapsible sections. For example, e-commerce stores often place reviews, descriptions and other information in different tabs. Google has stated that it gives full SEO weight to content under tabs, regardless of its position or structure on the page.

- A fast crawl rate setting won’t help with SEO rankings

As per Google, increasing the crawl rate in Search Console will not affect rankings.

- Webmasters can remove links from the disavow file

It is alright to remove domains from your disavow file if their links are no longer live, or no longer unnatural, and have already been processed. However, if those unnatural links still point to your site and could still pass value, it’s best to keep them in your disavow file.

- Domain Authority is not a signal in Google’s algorithms

Domain Authority is one of the metrics SEOs most commonly refer to when describing a site’s authority. However, as Google has confirmed, it is not a metric or signal that Google uses; it is just a term coined by Moz.

- No big SEO advantage with HTTP/2

As per Google, there is no big SEO advantage to using HTTP/2 as of now. The primary benefit of HTTP/2 is faster page loading, which results in happier visitors.

- No need for an XML sitemap for AMP pages

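For context, an AMP page is tied to its canonical page by a `<link rel="amphtml">` element on the canonical page. As a rough sketch, the hypothetical `AmpLinkFinder` below uses Python’s `html.parser` to pull that link out of a page, roughly the kind of discovery that makes a separate AMP sitemap unnecessary (the markup is made up).

```python
from html.parser import HTMLParser


class AmpLinkFinder(HTMLParser):
    """Collect the href of every <link rel="amphtml"> element in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.amp_urls: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # rel can hold several space-separated tokens; attribute values may be None
        rel_tokens = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "amphtml" in rel_tokens and attributes.get("href"):
            self.amp_urls.append(attributes["href"])


canonical_page = """
<head>
  <link rel="canonical" href="https://www.example.com/post">
  <link rel="amphtml" href="https://www.example.com/post/amp">
</head>
"""
finder = AmpLinkFinder()
finder.feed(canonical_page)
print(finder.amp_urls)  # prints ['https://www.example.com/post/amp']
```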
According to Google, there is no need to submit an additional sitemap for AMP pages. Google uses the rel="amphtml" link to understand them.

- International and multilingual links are not considered spam

Google doesn’t consider international and multilingual links spam, as long as they are of good quality and follow the Webmaster Guidelines.

- The site: command will show rich snippets if the webmaster has implemented them correctly

Google says that a site: query (e.g. site:https://www.cueforgood.com) may show rich snippets in the results if they have been implemented correctly on the website. If they don’t appear, check the structured data report in Google Search Console for errors or warnings.

- Google does not support the rel=canonical tag for images

Google has confirmed that it does not support the rel=canonical attribute for images.

- Google considers singular and plural words as different keywords

Google can treat the singular and plural variations of a keyword as two different entities. Use them wisely in your content marketing plans.

- No penalty for linking out to external websites

Google has confirmed that it will not penalize content for linking out to external websites. Linking out to useful information and relevant resources can, in fact, benefit your readers and users. Also note that linking to external websites is not mandatory, and you will not incur any penalty if you choose not to.

- Upload the disavow file to the canonical version of the website

Make sure that you upload the disavow file for the canonical/preferred version of your website; Google advises that the disavow file should belong to the preferred version of your property.

- Product pricing is not a ranking factor, as per Google

E-commerce stores are not ranked on the basis of selling products at a low price; according to Google, product pricing is not a ranking factor in web search.

- Backlinks shown in Search Console are crawled and in Google’s index

Google says that the links shown in Search Console have generally been crawled and are included in its index. If a linking URL is missing from the search results, it may carry a noindex tag, or the page may have been hacked.

- Hreflang won’t improve a website’s search engine rankings

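For reference, here is a minimal sketch that generates hreflang annotations as `<link rel="alternate" hreflang="...">` tags. The URLs, language-region codes and the `hreflang_tags()` helper are hypothetical; the usual convention is that every language version carries the full set of tags, including one pointing to itself, plus an optional `x-default` fallback.

```python
from html import escape
from typing import Optional


def hreflang_tags(alternates: dict[str, str], default_url: Optional[str] = None) -> list[str]:
    """Build one <link rel="alternate" hreflang=...> tag per language/region version."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}">'
        for code, url in alternates.items()
    ]
    if default_url:
        # x-default names the fallback page for users who match none of the listed languages
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}">')
    return tags


versions = {
    "en-us": "https://www.example.com/en-us/",
    "es-es": "https://www.example.com/es-es/",
}
# The same block of tags goes into the <head> of every version listed
for tag in hreflang_tags(versions, "https://www.example.com/"):
    print(tag)
```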
The hreflang tag simply helps Google show the right language or regional version of a page in its results (geo-based search). Google has confirmed that while it’s important to add hreflang tags to a multilingual website, they do not affect rankings.

- Using underscores or dashes in website URLs does not pose any SEO threat

A webmaster can use dashes or underscores in a URL. According to Google, neither version is preferred or recommended for a good SEO score.

- There is no way to redirect a penalized site safely. There is no escape from Google!

According to Google, there is no way to safely redirect a penalized website to another version. Doing so can be considered cloaking, which is against Google’s Webmaster Guidelines.

- Different Google algorithms impact a website differently

Google says that one of its algorithms may assess a website negatively while another assesses it positively. For example, a quality link profile can be favored by Google Penguin or another link-specific update, while shoddy content can still harm the website and earn it a negative assessment from the Google Panda update.

- Write alt text in the same language as the content

Google advises writing the alt text on a page in the same language as the content. For example, if your content is in Spanish, write your alt text in Spanish. This helps prevent confusion for Google’s crawlers and is also useful for users.

- Google does not provide any SEO certifications to webmasters

Google has clarified that it does not issue any SEO certifications. It does offer Google Analytics and AdWords certifications, but not SEO certifications.

- There is a slight SEO advantage in having keywords in URLs

As per Google, webmasters did focus on including keywords in URLs back in 2008-10, but today this is only a very minor factor. Google’s algorithm is smart enough to understand other elements on the page, such as the type of content and backlink signals. So keywords in the URL are no longer essential.

- Outbound links are not considered a ranking factor

Google clarifies that adding links to Wikipedia or other official, high-authority resources on the topic does not help you achieve higher rankings. Such links may inform readers and add value for users, but they offer little gain in search engine rankings.

- Google does not index images as frequently as it does web pages

According to Google, images don’t change very frequently, so Google does not re-crawl and index them as often as HTML pages. This could be why index coverage differs between HTML pages and image URLs.

- Title tags are not a critical SEO ranking factor for Google

Title tags have received great emphasis since the emergence of the SEO field. However, as per Google, they are not a critical ranking factor. In fact, Google sometimes shows a different title depending on the search query. When it comes to search queries, it’s Google that leads the way, not webmasters.

- Using special characters and decimal points in URLs is not a very SEO-friendly practice

According to John Mueller, it is better to avoid special characters and decimal points in URLs. However, some pages with such URLs may still rank well in Google for a specific set of queries.

- There is no SEO advantage to using capital letters in URLs

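Pulling together the URL advice in this list (no capitals, no special characters or decimal points, dashes or underscores both acceptable), one way to keep URLs simple is to generate slugs automatically. The hypothetical `url_slug()` helper below lowercases the title, folds accented letters to plain ASCII, and turns every other run of characters into a single hyphen. Choosing hyphens over underscores is an assumption of this sketch, and dropping non-ASCII characters would not suit sites in non-Latin scripts.

```python
import re
import unicodedata


def url_slug(title: str) -> str:
    """Turn a title into a lowercase, hyphen-separated URL slug."""
    # Decompose accented letters (é -> e + accent) and drop anything that is not ASCII
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Collapse every run of other characters (spaces, underscores, dots, symbols) into one hyphen
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower())
    return slug.strip("-")


print(url_slug("Café Running Shoes_v2.0 (Sale!)"))  # prints cafe-running-shoes-v2-0-sale
```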
Google says there is no benefit to using capital letters in URLs, as capitalization does not affect rankings. So it’s better to avoid them and keep URLs simple.

- The disavow links file has a size limit of 2MB

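A pre-upload check against these limits might look like the sketch below. It assumes that “2MB” means 2 × 1024 × 1024 bytes and, conservatively, counts every line of the file (including comments and blank lines) toward the 100,000 limit; `check_disavow_limits()` is a hypothetical helper.

```python
MAX_BYTES = 2 * 1024 * 1024  # assumed reading of "2MB"
MAX_LINES = 100_000          # counts every line, comments and blanks included (conservative)


def check_disavow_limits(text: str) -> list[str]:
    """Return a list of problems if the disavow file text exceeds the limits; empty if it fits."""
    problems = []
    size = len(text.encode("utf-8"))
    if size > MAX_BYTES:
        problems.append(f"file is {size:,} bytes; limit is {MAX_BYTES:,}")
    line_count = len(text.splitlines())
    if line_count > MAX_LINES:
        problems.append(f"file has {line_count:,} lines; limit is {MAX_LINES:,}")
    return problems


sample = "# Spammy directory network\ndomain:spam.example\nhttps://bad.example/page.html\n"
print(check_disavow_limits(sample))  # prints []
```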
As confirmed by Google, the disavow links file is limited to 2MB and 100,000 URLs.

- There is no direct correlation between crawl frequency and higher rankings

Google has confirmed that there is no direct relationship between crawl frequency and higher rankings. In other words, a page that gets crawled daily will not necessarily rank higher.

- No need to set a preferred domain if redirects are correctly implemented and point to the preferred version

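Here is a minimal sketch of the redirect logic this relies on, assuming a hypothetical preferred version of `https://www.example.com`. Every HTTP or non-www request maps to the same path and query string on the preferred host, so each old URL lands on its exact counterpart rather than the homepage. The `redirect_target()` helper is hypothetical, and wiring it into your server as a 301 is left to your stack.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

PREFERRED_SCHEME = "https"          # hypothetical preferred version of the site
PREFERRED_HOST = "www.example.com"


def redirect_target(url: str) -> Optional[str]:
    """Return the preferred-version URL to 301 to, or None if `url` is already preferred.

    Assumes it is only called for requests that reach this site's own hosts.
    """
    parts = urlsplit(url)
    if parts.scheme == PREFERRED_SCHEME and parts.hostname == PREFERRED_HOST:
        return None
    # Keep the path and query so each URL maps to its exact counterpart
    return urlunsplit((PREFERRED_SCHEME, PREFERRED_HOST, parts.path or "/", parts.query, ""))


print(redirect_target("http://example.com/shoes?page=2"))  # prints https://www.example.com/shoes?page=2
```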
Google has clarified that the preferred domain setting in Search Console is unnecessary if all redirects are implemented correctly, meaning that webmasters redirect every non-preferred URL to its preferred counterpart.

- A website can incur both types of penalties: manual action and algorithmic

Google has confirmed that a website can be penalized both by a manual action and algorithmically. For example, a site may be hit algorithmically by the Google Penguin update and also receive a manual action for schema markup spam.

- Move the disavow file when migrating to HTTPS: re-upload it to the preferred HTTPS version

Google recommends moving the disavow file from the HTTP to the HTTPS version once the website migration is complete; it is important to upload the disavow file for the HTTPS version.

- Google won’t disavow domains when you disavow IP addresses

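A simple validator for this mistake could flag `domain:` entries whose value parses as an IP address. The sketch below uses Python’s standard `ipaddress` module; `ip_domain_entries()` is a hypothetical helper, and the sample file uses reserved example names and a documentation IP range.

```python
import ipaddress


def ip_domain_entries(disavow_text: str) -> list[str]:
    """Return domain: entries whose value is an IP address rather than a domain name."""
    flagged = []
    for line in disavow_text.splitlines():
        entry = line.strip()
        if not entry.lower().startswith("domain:"):
            continue  # comments (#) and full-URL lines are not domain entries
        value = entry[len("domain:"):].strip()
        try:
            ipaddress.ip_address(value)
        except ValueError:
            continue  # not an IP address, so a normal domain entry
        flagged.append(entry)
    return flagged


disavow = """# Spammy network
domain:spam.example
domain:203.0.113.7
https://bad.example/page.html
"""
print(ip_domain_entries(disavow))  # prints ['domain:203.0.113.7']
```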
Google says that when disavowing domains, it is important to list the domain name: Google will not process a disavow entry that gives an IP address instead. So avoid making this mistake. There are some good online tools you can use to validate the domains in your disavow file.

- It is best to keep 301 redirects active for one year

It is good practice to keep 301 redirects active for one year. John Mueller has confirmed that Google may take up to a year to fully process a 301 redirect’s destination.

- Social signals do not influence page rankings

Google has clarified several times that social signals do not impact Google rankings. Google may acknowledge links from social networking websites and rank the content published on them, but beyond that, there is no direct relationship between social shares and better rankings.

- Google never indexes all of a website’s pages

Google has made it clear that it never indexes all the pages of a website. Of course, this might not hold for websites with five or six pages, which Google may index in full. But for large-scale websites, Google doesn’t index every page.

- User engagement on a website is not a ranking factor

Google has clarified that visitor engagement on a website is not a ranking factor. A user may view several pages, create an account or sign up on the website, but none of these actions affects rankings.

- According to Google, 404s do not attract a Google Panda penalty

According to Google, you won’t be penalized by Panda for 404 errors. As Google has stated on multiple occasions, 404s generally do not affect your site, but a large number of them may degrade the user experience.

- Bad HTML validation is not a threat to rankings but can impact structured data

According to Google, invalid HTML does not adversely affect your site’s rankings, although there is a chance it may impede crawling and break structured data. So validate any structured markup you have implemented as well.

- Links within a PDF document will pass PageRank

Google’s Gary Illyes has confirmed that links within a PDF file are considered valuable. So if you link to website pages from a PDF file, each of those still counts as a link.

- Google treats error codes 429 and 503 in the same manner

Google has confirmed that it treats status codes 429 (Too Many Requests: the client has sent too many requests in a given period) and 503 (Service Unavailable: the server is currently unable to handle requests) identically.

- Add rel="nofollow" to “Web Design by” links

According to Google’s John Mueller, if a webmaster worries that a “Web Design/Development by” credit link in the footer may look like an unnatural backlink, it is better to add rel="nofollow" to it.

- Disavow file syntax in uppercase or lowercase doesn’t make a difference to Google

Google accepts domain names in either uppercase or lowercase in the disavow file; as it has confirmed, you can use whichever format you like. So Example.com and example.com are equally good for Google.

- Add pagination to infinite scroll pages to get them indexed

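One common way to follow this advice is to back the infinite scroll with ordinary component pages that each have their own URL, so Googlebot can reach every item through plain links instead of scrolling. The sketch below assumes a hypothetical `?page=N` URL scheme, with page 1 served at the base URL; both helpers are hypothetical.

```python
import math


def page_urls(base_url: str, total_items: int, per_page: int) -> list[str]:
    """URLs of the paginated component pages behind an infinite-scroll listing."""
    pages = max(1, math.ceil(total_items / per_page))
    # Page 1 is the base URL itself; later pages use an assumed ?page=N parameter
    return [base_url] + [f"{base_url}?page={n}" for n in range(2, pages + 1)]


def items_for_page(items: list, page: int, per_page: int) -> list:
    """Slice of items rendered on component page `page` (1-based)."""
    start = (page - 1) * per_page
    return items[start:start + per_page]


print(page_urls("https://www.example.com/shoes", total_items=45, per_page=20))
```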
Google says that for a giant page with infinite scroll, it is better to add pagination. If the page keeps loading content indefinitely, Googlebot may stop crawling it.

- The Change of Address tool helps with domain migrations

Moving to a different domain? Google says webmasters can use the Change of Address tool to speed up the process a little by giving Google a loud and clear signal. However, it is not needed for an HTTP-to-HTTPS migration.

- Googlebot can follow up to 5 redirects at a time

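Before launching a migration, you could audit your redirect map for chains. The sketch below assumes the map is available as a simple old-URL-to-new-URL dictionary; it follows each chain, raises an error on loops or on more than the 5 hops mentioned here, and can flatten every entry into a single hop (Page A straight to its final page). The function names are hypothetical.

```python
def resolve(start: str, redirects: dict[str, str], max_hops: int = 5) -> tuple[str, int]:
    """Follow the redirect map from `start`; return (final URL, number of hops)."""
    url, hops, seen = start, 0, {start}
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen:
            raise ValueError(f"redirect loop at {url}")
        if hops > max_hops:
            raise ValueError(f"more than {max_hops} hops from {start}")
        seen.add(url)
    return url, hops


def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Point every old URL straight at its final destination (single hop)."""
    # A loop-free chain can never be longer than the number of entries in the map
    return {src: resolve(src, redirects, max_hops=len(redirects))[0] for src in redirects}


chain = {"/old-shoes": "/shoes-2016", "/shoes-2016": "/shoes", "/shoes": "/footwear/shoes"}
print(resolve("/old-shoes", chain))  # prints ('/footwear/shoes', 3)
```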
During website migrations, you may end up with redirect chains. According to Google, Googlebot can follow up to 5 redirects in a chain, and anything above this number is not good! To make it easier for Google and to stay on the safe side, keep it simple with a single hop: Page A → Page B.

- There is no guarantee of indexing from sitemaps

According to Google, even if a webmaster has submitted a sitemap, there is no guarantee that Google will index the pages in it. Indexing depends on a number of other factors, such as content, backlinks and internal links. However, it is still important to generate and submit an XML sitemap; otherwise it becomes harder for Google to find your pages.

- Google keeps updating and improving its algorithms

Needless to say, Google keeps changing its algorithm to provide a better experience for searchers, and it has shared these modifications time and again. We all know how much Google has evolved in the last couple of years; Featured Snippets, schema markup and Instant Search (recently retired) are some good examples. Way to go, Google!

- Google picks up title tags automatically and presents them in a better way

When showing a title for a search query, Google is smart enough to customize it if it deems fit. So if you notice different titles in the search results, do not be surprised!

- A domain’s age is not a ranking signal

John Mueller has busted a long-standing SEO myth by confirming that domain age is not a ranking parameter for Google. Neither is domain registration length.

- The robots.txt file should be smaller than 500KB

John Mueller confirmed that a robots.txt file should not exceed 500KB. That seems large enough for most sites, but it would be interesting to know what happens if a robots.txt file exceeds this limit.
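For what it’s worth, Google’s robots.txt documentation states that content beyond the size limit is ignored. The sketch below models that behavior, assuming “500KB” means 500 × 1024 bytes; it also drops any rule cut in half at the boundary, which is this sketch’s own conservative choice. `effective_robots_txt()` is a hypothetical helper.

```python
MAX_ROBOTS_BYTES = 500 * 1024  # assumed reading of the 500KB limit


def effective_robots_txt(raw: bytes) -> tuple[bytes, bool]:
    """Return (the part of robots.txt within the limit, whether anything was cut off)."""
    if len(raw) <= MAX_ROBOTS_BYTES:
        return raw, False
    kept = raw[:MAX_ROBOTS_BYTES]
    # A rule split at the boundary is unreliable, so drop the trailing partial line
    kept = kept[: kept.rfind(b"\n") + 1]
    return kept, True


small = b"User-agent: *\nDisallow: /cart/\n"
print(effective_robots_txt(small)[1])  # prints False
```

Any rules that end up past the cut-off would silently stop applying, so keeping the most important directives near the top of the file is a sensible habit.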

We hope that you found some useful information in our list. If there is any other update, query or statement you think deserves a place here, do share it in the comments section or contact us by email.

Disclaimer: The information above has been compiled from trustworthy sources such as SERoundtable, the Google Webmaster Blog and official Google spokespeople. However, it should not be taken as Google’s final word; if you have any questions or doubts, I recommend following the Google Webmaster Guidelines. As an SEO, I suggest you tread with the utmost caution and rely on research-based knowledge.

- About the Author

I am an organic search specialist who gets as excited about search engine algorithms, updates and best practices as a fan who gets front-row tickets to their favorite soccer game! I am also pretty obsessive about ROI-based SEO and creating customized marketing plans for different clients. Apart from this, I enjoy good food and travel.

7 Replies to “71 Google-Ordained SEO Guidelines You May Have Overlooked”

Add a comment

-

Jiva’s Organic Traffic Growth: 354% Surge in 6 Months | CueForGood

by Nida DanishSummary: Jiva’s efforts to empower smallholder farmers weren’t gaining the digital traction they deserved. With a strategic overhaul led by …

Continue reading “Jiva’s Organic Traffic Growth: 354% Surge in 6 Months | CueForGood”

-

What We Learned When We Switched From Disposable Tissues to Reusable Napkins

by Nida DanishAt CueForGood (CFG), we’ve embraced a refreshing change: reusable cloth napkins. While the switch may seem minor, it’s rooted in …

Continue reading “What We Learned When We Switched From Disposable Tissues to Reusable Napkins”

-

Of Light, Laughter & Transformation: Diwali 2024 at Cue For Good

by Nida Danish

On any given day, walking into the Cue For Good office feels like stepping into a space with heart. It’s …

Continue reading “Of Light, Laughter & Transformation: Diwali 2024 at Cue For Good”

-

Why PHP Still Matters in 2024: A Look at Its Continued Relevance

by Girish TiwariAt its peak in the early 2010s, PHP powered the majority of websites globally, including major platforms like Facebook and …

Continue reading “Why PHP Still Matters in 2024: A Look at Its Continued Relevance”

-

How Meta’s New Holiday Ad Features Can Transform Your Business This Season

by Charanjeev SinghThis year, Tapcart’s 2024 BFCM Consumer Trends Report suggests that nearly 60% of shoppers kick off their holiday shopping in …

Continue reading “How Meta’s New Holiday Ad Features Can Transform Your Business This Season”

-

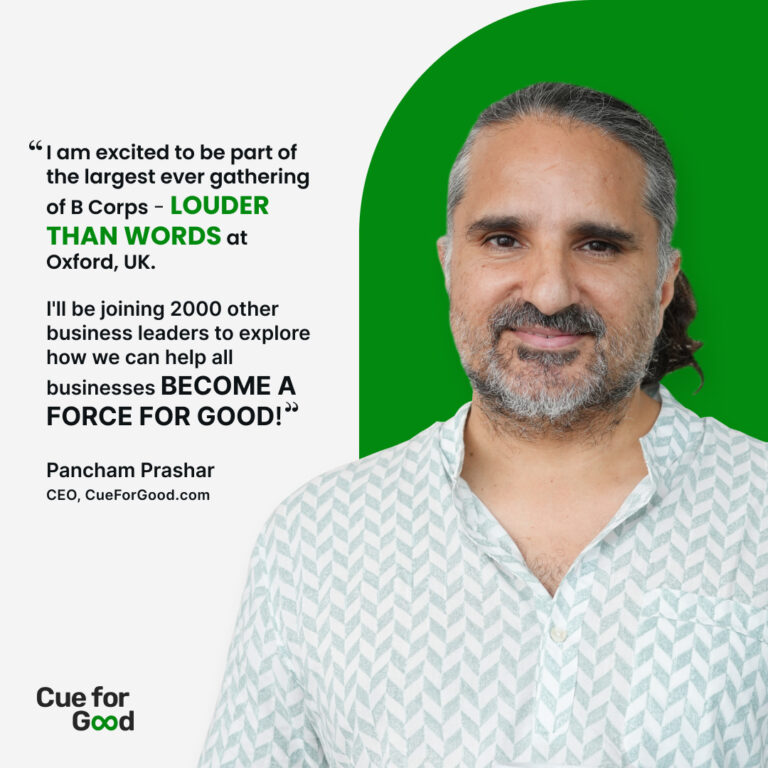

Cue For Good’s Journey at the B Corp Festival 2024: Exploring Louder Than Words

by Pancham PrasharOn September 10th and 11th, 2024, I had the incredible experience at the “Louder Than Words” B Corp Festival, held …

Continue reading “Cue For Good’s Journey at the B Corp Festival 2024: Exploring Louder Than Words”

A Google help page says: “We recommend that you use hyphens (-) instead of underscores (_) in your URLs” at: https://support.google.com/webmasters/answer/76329?hl=en. Matt Cutts did say at one point that either could be used, but he later retracted that. I tell people that hyphens are preferred over underscores: Google separates words that are joined by hyphens, but not words joined by underscores.

Have you ever considered creating an ebook or guest authoring on other websites? I have a blog based on the same information you discuss and would love to have you share some stories/information. I know my readers would enjoy your work. If you’re even remotely interested, feel free to send me an email.

Thanks for the invitation and for sharing my post with your readers.

Cheers

Ajit

Well, nice juicy post… well written, Ajit. I would like to add something you might have missed: one specific study on Google’s rankings found that the top spots on the first page of its SERPs were all above 2,000 words. In fact, I rank #1 for many of the most competitive searches, and almost all of those articles are over 2,000 words. Content length, too, is a deciding factor to some extent; we can’t ignore it.

Hi, Loveneet!

Thank you for the suggestion. We intend to keep on updating this post with more information as we go. Informative and unique content is indeed one of the keystones for ranking on Google. Hope you’ll keep coming back with more suggestions.

Regards

Ajit

Wow, very nice tips, and I agree.

Thank you, Michael! I hope you enjoyed the post.

Regards

Ajit